LLM Access Shield: Preventing Data Leakage & Undesirable Responses

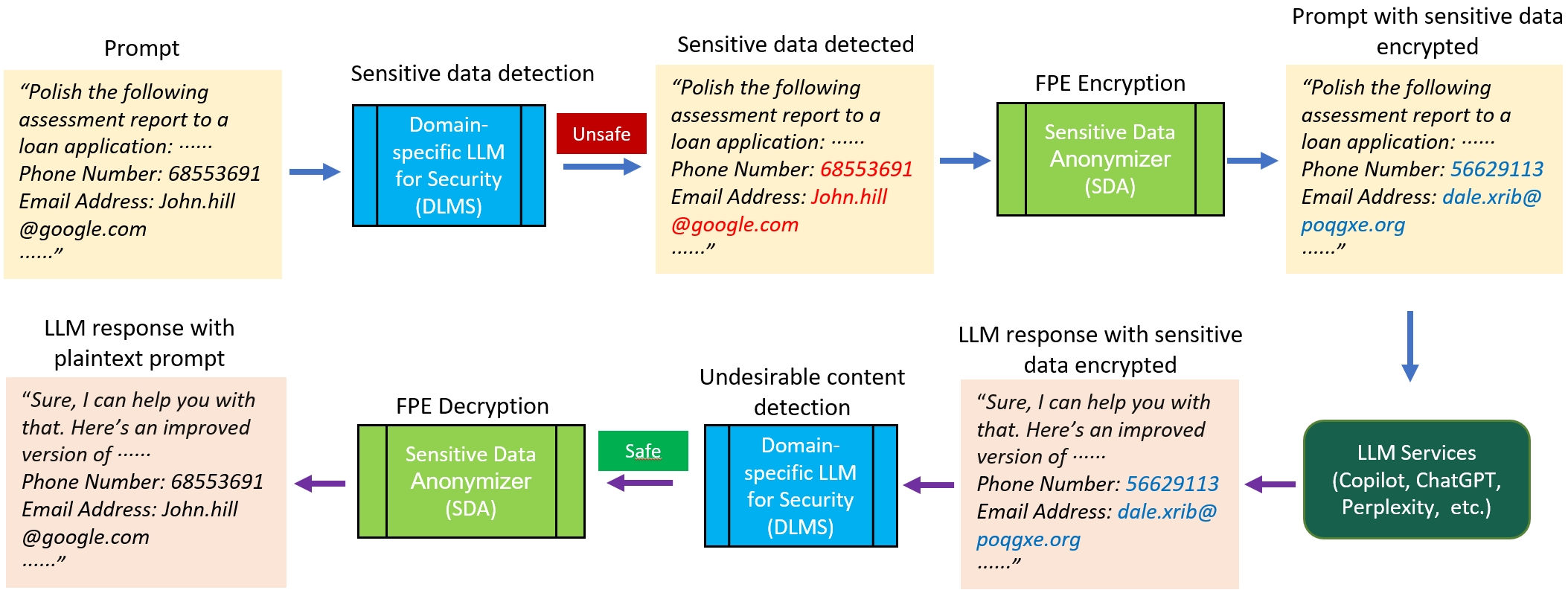

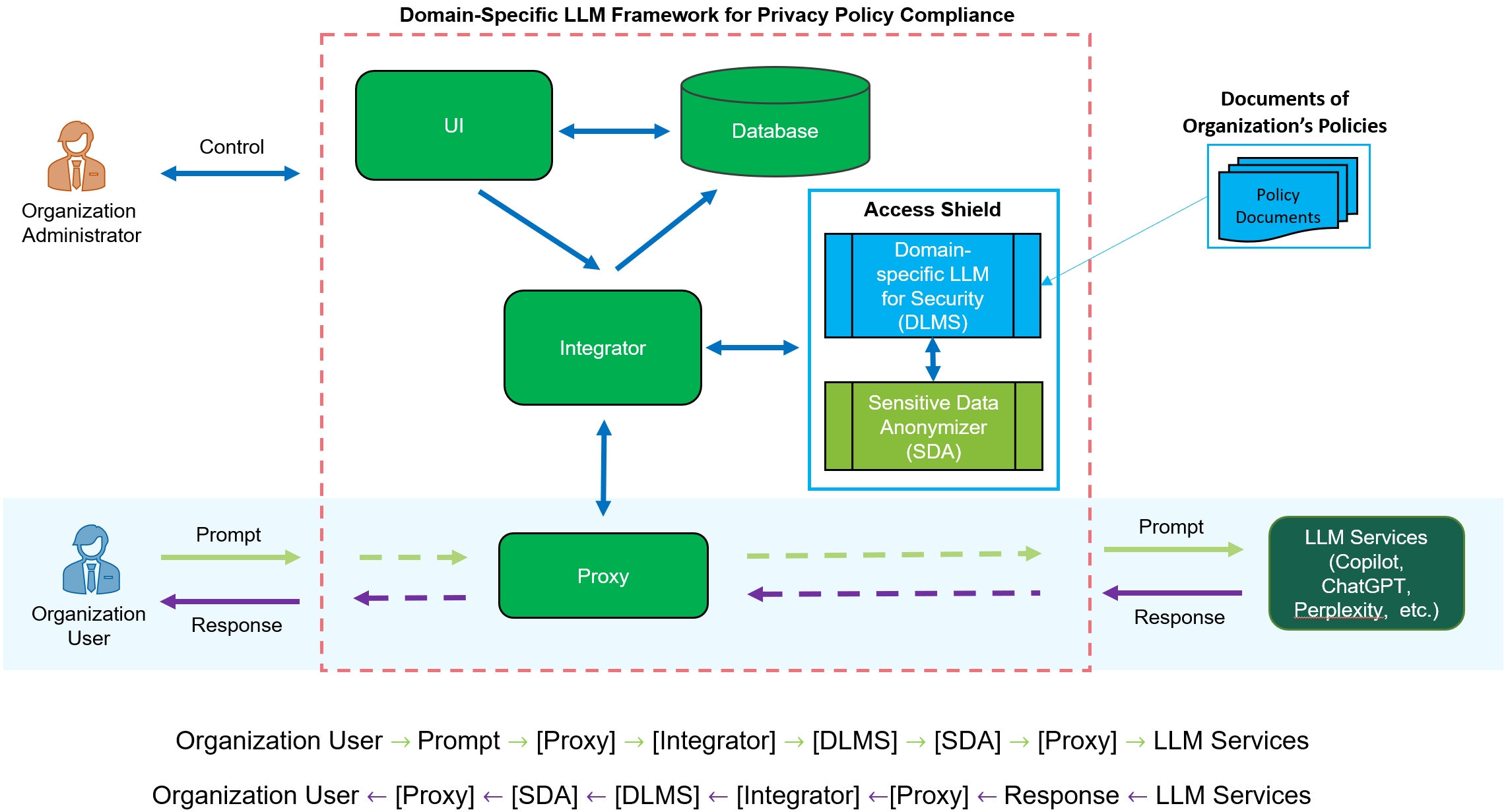

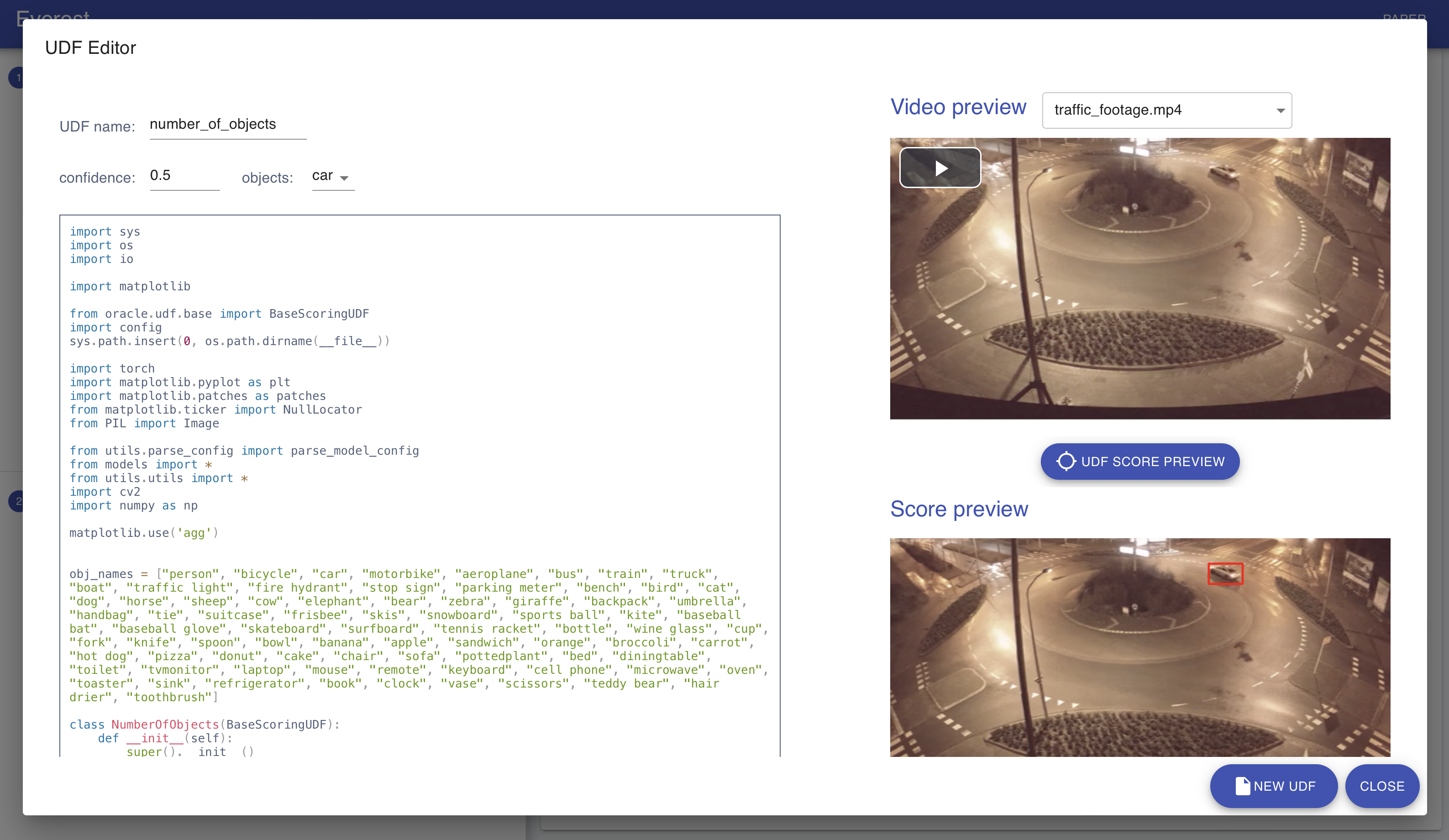

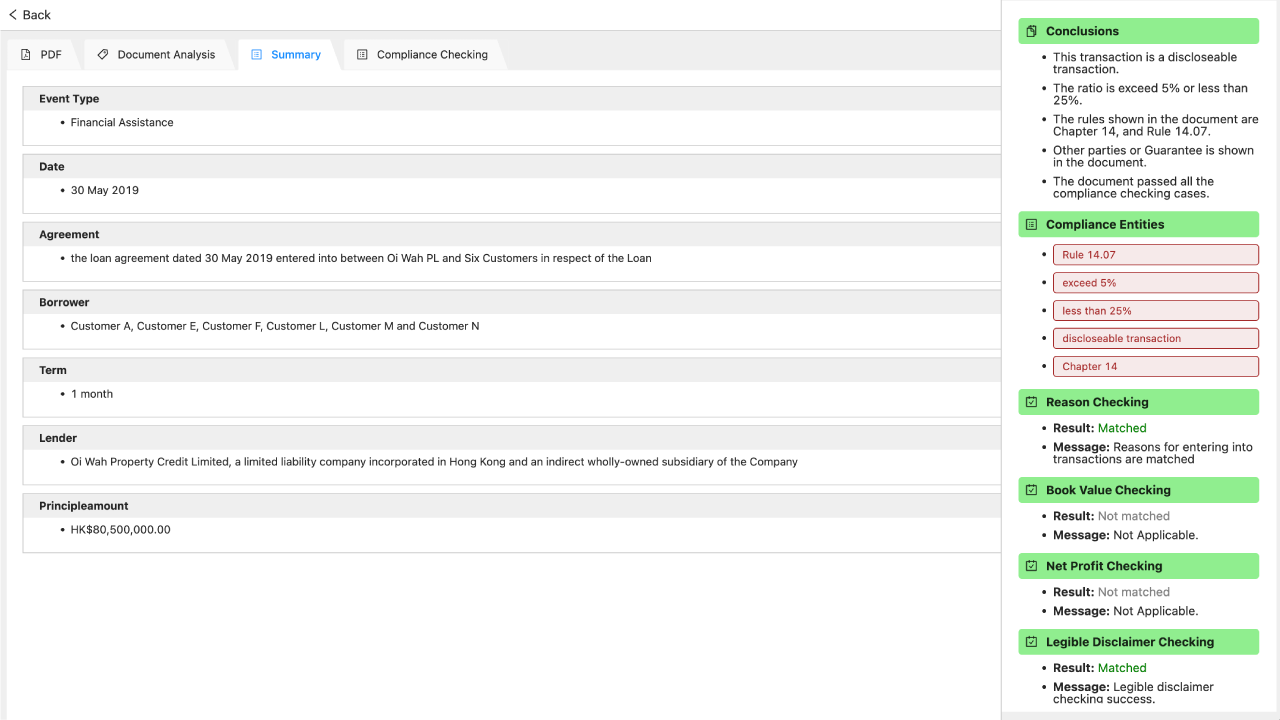

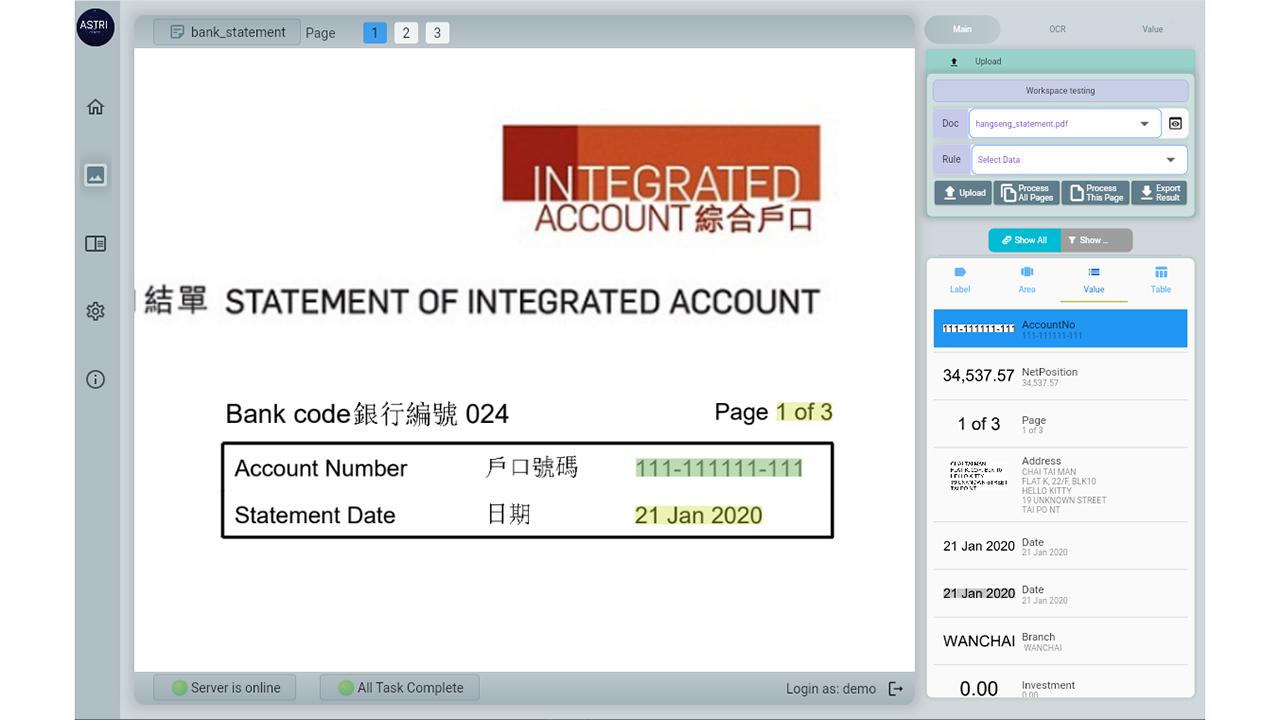

ASTRI has developed an integrated security framework that protects enterprises using Large Language Models (LLMs) against sensitive data leakage and harmful outputs. The system combines a user-defined sensitive data taxonomy, domain-specific model fine-tuning, format-preserving encryption (FPE), and real-time policy management so that confidential information stays protected and AI adoption remains compliant and reliable.

As LLMs are adopted across finance, legal, and education, the risks of data leakage and undesirable responses grow. Existing LLMs lack precise, organization-specific detection of sensitive data, cannot anonymize data while preserving contextual semantics, and do not allow dynamic policy customization. These gaps create security, compliance, and trust challenges.

- User-configurable sensitive data taxonomy and policy management support security needs across industries and departments, with detection rules that can be adjusted dynamically.

- Domain-specific LLM for Security (DLMS) is fine-tuned with a parameter-efficient method (LoRA) to accurately detect and classify multiple types of sensitive entities.

- Format-preserving encryption (FPE) anonymizes sensitive data without altering structure or contextual semantics, balancing data utility and privacy compliance.

- Reduces the risk of sensitive data leaks and unsafe LLM outputs, strengthening organizational data security and compliance.

- Dynamic strategies and customizable policies can be updated quickly as regulations and industry needs evolve, supporting scalable deployment.

- Modular architecture integrates easily with existing IT systems and can be tailored for different industries and use cases.

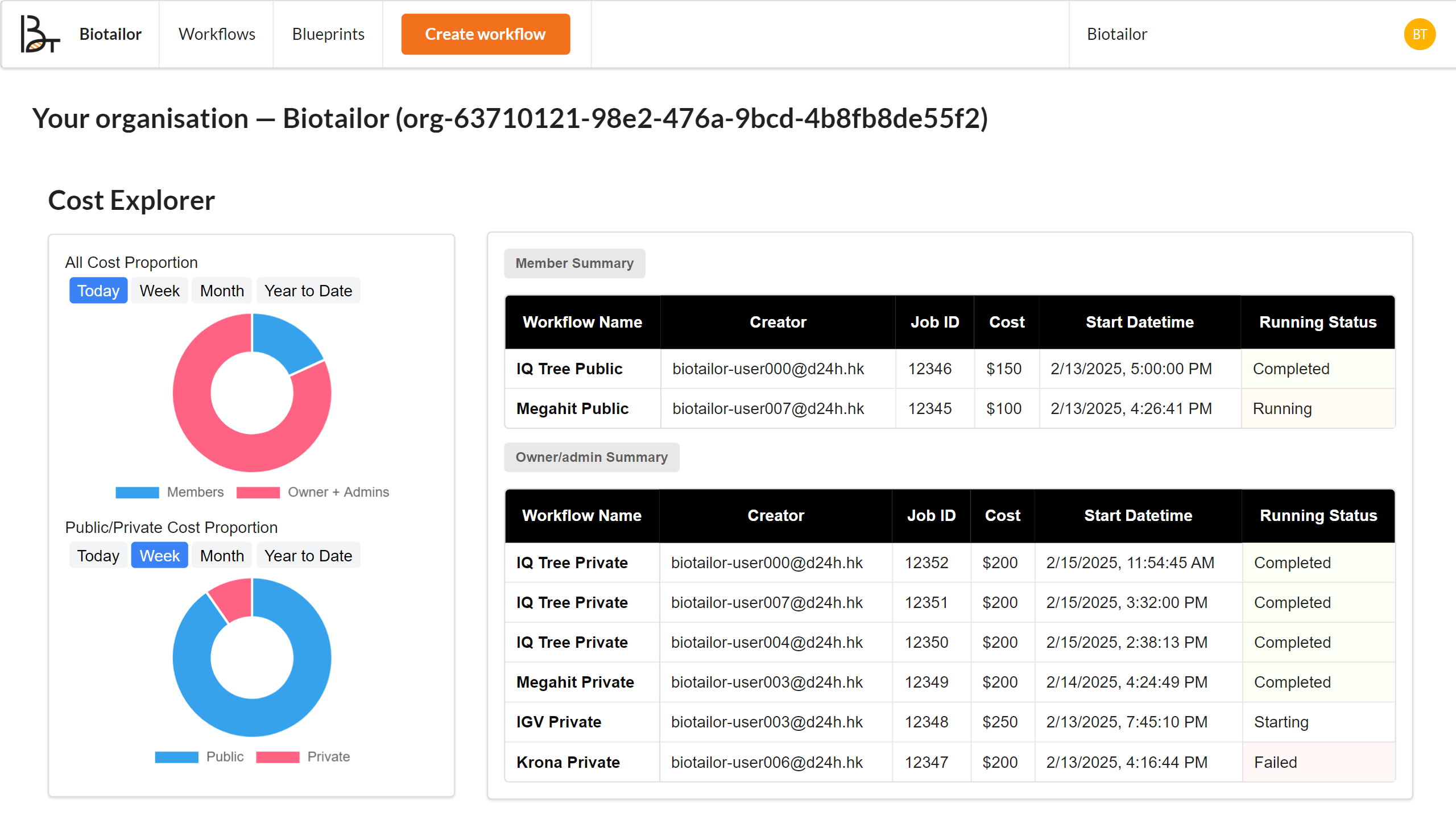

- Administrator interface and dashboard support real-time monitoring, alerting, and comprehensive compliance reporting, improving operational transparency.

- Sensitive data protection in enterprise LLM usage

- AI compliance solutions for finance, healthcare, and legal sectors

- Data privacy protection, anonymization, and automated audit workflows

- Secure monitoring and policy enforcement for AI responses
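The detect-then-anonymize flow described above (taxonomy, policy, format-preserving anonymization) can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `TAXONOMY` and `POLICY` dictionaries, the regular expressions standing in for the fine-tuned detection model, and the helper names `protect` and `restore` are hypothetical and are not ASTRI's implementation. The cipher is a toy keyed character shift that keeps digits as digits and letters as letters; it is not a standardized FPE mode such as NIST FF1 and should not be used to protect real data.

```python
import hashlib
import hmac
import re
import string

# Hypothetical taxonomy: entity label -> detector. Regexes stand in for the
# fine-tuned detection model, which the source does not describe in detail.
TAXONOMY = {
    "PHONE": re.compile(r"\b\d{4} \d{4}\b"),
    "ACCOUNT": re.compile(r"\b\d{3}-\d{6}-\d{3}\b"),
}

# Hypothetical policy: only labels marked "encrypt" are anonymized.
POLICY = {"PHONE": "encrypt", "ACCOUNT": "encrypt"}

ALPHABETS = (string.digits, string.ascii_lowercase, string.ascii_uppercase)


def _keystream(key: bytes, tweak: str, length: int) -> bytes:
    """Derive `length` keyed bytes from the key and a tweak via HMAC-SHA256."""
    blocks = []
    counter = 0
    while 32 * len(blocks) < length:
        msg = f"{tweak}:{counter}".encode()
        blocks.append(hmac.new(key, msg, hashlib.sha256).digest())
        counter += 1
    return b"".join(blocks)[:length]


def _shift(text: str, key: bytes, tweak: str, sign: int) -> str:
    """Shift each digit/letter within its own alphabet; leave separators as-is."""
    out = []
    for ch, k in zip(text, _keystream(key, tweak, len(text))):
        for alphabet in ALPHABETS:
            if ch in alphabet:
                ch = alphabet[(alphabet.index(ch) + sign * k) % len(alphabet)]
                break
        out.append(ch)
    return "".join(out)


def _apply(text: str, key: bytes, sign: int) -> str:
    """Run every detector whose policy is "encrypt" and transform its matches."""
    for label, pattern in TAXONOMY.items():
        if POLICY.get(label) == "encrypt":
            text = pattern.sub(
                lambda m, label=label: _shift(m.group(0), key, label, sign), text
            )
    return text


def protect(prompt: str, key: bytes) -> str:
    """Anonymize detected entities before the prompt leaves the organization."""
    return _apply(prompt, key, +1)


def restore(text: str, key: bytes) -> str:
    """Reverse the anonymization on text that still carries the tokens."""
    return _apply(text, key, -1)
```

For example, `protect("Call 9123 4567 about account 012-345678-901.", key)` returns a string of the same length in which the phone and account numbers are replaced by other digits in the same layout, and `restore` with the same key recovers the original. Because the format survives, the same detectors locate the anonymized tokens again, which is what lets this sketch reverse the substitution in a model response. The sketch assumes the patterns in the taxonomy do not overlap.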

Patent

- US Application No. 19/189,211; CN patent application in process

Hong Kong Applied Science and Technology Research Institute (ASTRI) was founded by the Government of the Hong Kong Special Administrative Region in 2000 with the mission of enhancing Hong Kong’s competitiveness through applied research. ASTRI’s core R&D competence is grouped under four Technology Divisions: Trust and AI Technologies; Communications Technologies; IoT Sensing and AI Technologies; and Integrated Circuits and Systems. This competence is applied across six core areas: Smart City, Financial Technologies, New-Industrialisation and Intelligent Manufacturing, Digital Health, Application Specific Integrated Circuits, and Metaverse.